How we use funds

We keep openQbank lean and cost-efficient. Funds are used for infrastructure and AI-related costs.

Infrastructure

Hosting, storage, monitoring, backups, and database reliability upgrades.

AI costs

Model inference, embeddings, evaluation runs, and safety/quality checks.

Giving back

Any and all profits above these operating costs are donated to Khan Academy.

Quality assurance is community-driven through volunteer verification and feedback. We do not allocate funds to paid QA.

Planned infrastructure migration

SQLite is fantastic for single-user and low-concurrency deployments, but it can hit write-lock contention as usage grows (especially with multiple workers). PostgreSQL is the simplest “real” upgrade for safe concurrent reads/writes.

This migration also includes moving from our current hosting provider to Google Cloud Platform. The goal is to enable stronger reliability guarantees and unlock product capabilities like real-time features and OAuth sign-in.

What changes (and what doesn’t)

- Fewer lock errors under real multi-user load.

- Safer background jobs and future async workflows.

- Preserve the questions table. Other tables can be recreated/reset if needed.

- Rollback-friendly cutover: the app can fall back if a deploy goes sideways.

Question bank v2: RAFT-based question engine

The next generation of openQbank focuses on consistency, grounded explanations, and better coverage of “why” a choice is right or wrong, without turning every question into a long essay.

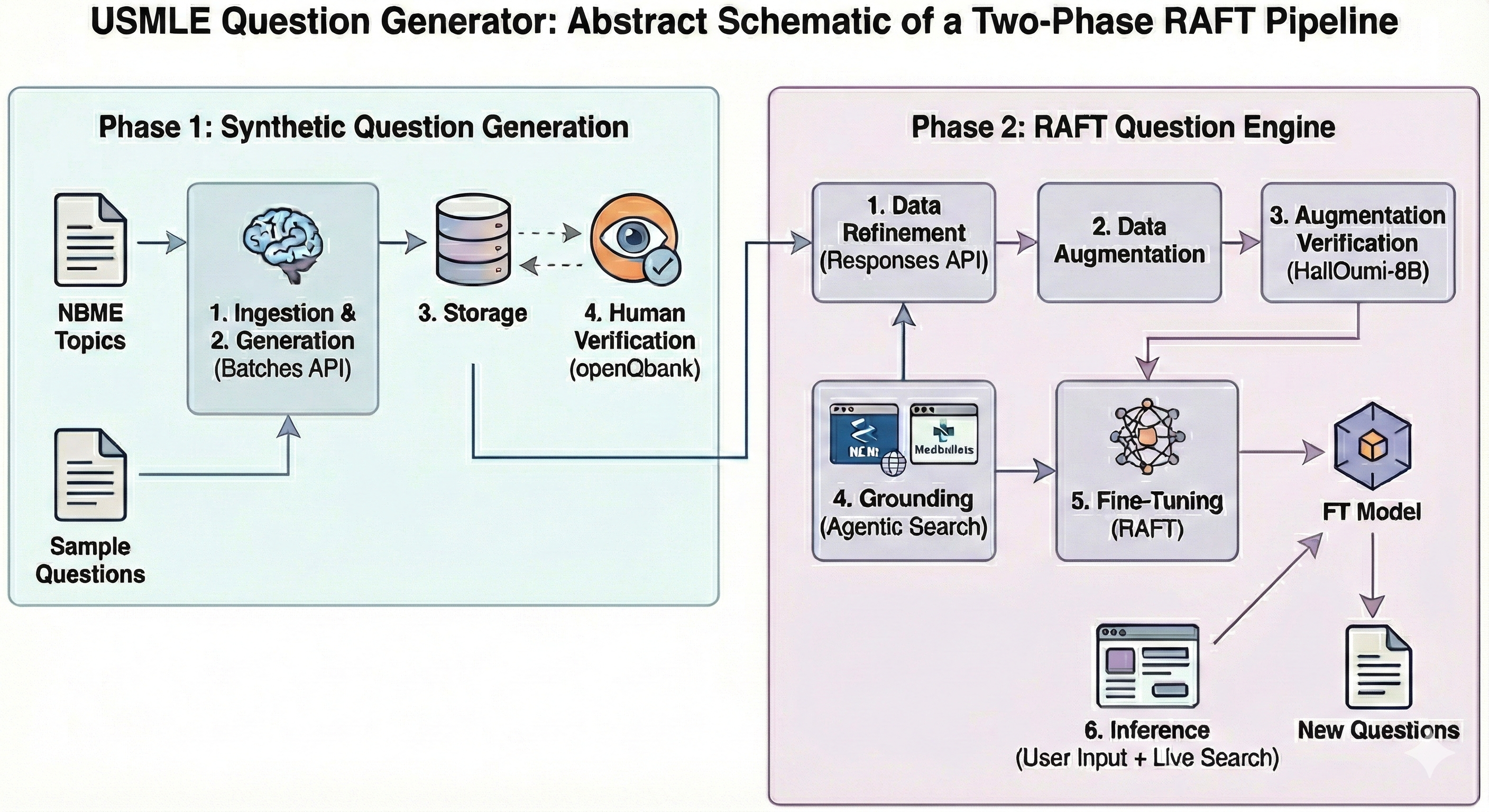

Phase 1: Synthetic question generation (human-in-the-loop)

- Ingest topics + examples and generate questions in batches.

- Store outputs and track revisions/metadata.

- Human verification to ensure clinical plausibility, clarity, and NBME-like style.

We are here: openQbank today is primarily this human verification layer, turning raw model generations into a curated, exam-style bank you can trust.

Phase 2: RAFT question engine (grounded + trainable)

- Data refinement to normalize format, enforce style constraints, and remove weak items.

- Data augmentation for coverage and robustness (variants, distractors, differentials).

- Augmentation verification to reduce hallucinations and enforce factuality.

- Grounding via agentic search (web + trusted sources) so explanations are citeable.

- RAFT fine-tuning so the model internalizes the format and evidence-handling behavior.

- Inference with live search when needed for up-to-date or niche details.

The goal is a system that can generate high-quality questions and explanations reliably, while keeping a clear chain of evidence for users who want to verify and learn deeper.

Donate

Donations help subsidize API costs and hosting fees, ensuring that unlimited question access can remain as cheap as possible.

You will be redirected to Stripe Checkout.